Google Cloud Next 2025: Exclusive AI Announcements Straight from Las Vegas

Not long ago, in cooperation with AppSatori, we hosted a webinar for our developers and Škoda Auto colleagues to relay the freshest takeaways from Google Cloud Next 2025 in Las Vegas. The keynote speaker was Ivan Kutil—Google Developer Expert and AppSatori CTO—who attended the conference in person for the sixth time.

Google’s end-to-end AI stack

Google outlined its full AI stack—a layered ecosystem spanning hardware, models, and developer tools. At the base sits the AI Hypercomputer with custom TPU chips; above that run large language models (notably Gemini); next come services like Vertex AI; and at the top are programmable agents that integrate into your apps.

For 2025, Google plans to invest $75B in infrastructure: data centers, AI chips, and networking—including the world’s most extensive subsea cable network.

The new 7th-gen TPUs deliver up to 3,600× the compute of Google’s first publicly available TPU from 2018, well beyond the pace of Moore’s law. Fun fact: Google uses AI-assisted tools to design these chips.
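To put the 3,600× figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the common reading of Moore’s law as a doubling every two years (our assumption, not a number from the keynote) and asks how long that pace would need to reach the same gain. The function names are ours.

```python
import math


def years_for_gain(gain: float, doubling_period_years: float = 2.0) -> float:
    """Years needed to reach `gain` if performance doubles every `doubling_period_years`."""
    return math.log2(gain) * doubling_period_years


def gain_after(years: float, doubling_period_years: float = 2.0) -> float:
    """Gain reached after `years` at the same doubling pace."""
    return 2.0 ** (years / doubling_period_years)


# Assumed pace: one doubling every two years.
print(f"{years_for_gain(3600):.1f} years to reach 3,600x")  # 23.6 years
print(f"{gain_after(10):.0f}x after one decade")            # 32x
```

At a two-year doubling pace, a 3,600× gain would take roughly 23.6 years, far longer than the TPU line has existed.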

Google also announced Cloud WAN, opening Google’s private global network to customers for more efficient—and above all secure—inter-region data transfer without traversing the public internet.

Gemini 2.5 Pro: accuracy and reasoning

The latest Gemini 2.5 Pro brings major gains in answer accuracy and multimodal capability. According to Google’s own comparisons, models such as Gemini Flash deliver up to 24× “smarter” responses per dollar than alternatives.

A standout is the new “thinking” capability: rather than answering in a single pass, the model, trained with reinforcement learning, spends up to 1,000× more compute reasoning through intermediate steps before it responds. Teams can set a thinking budget: a higher budget generally means more accurate but slower responses.
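As a rough illustration of what setting a thinking budget might look like, the sketch below builds a request body as a plain dictionary. The field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`) follow the Gemini API’s REST shape to the best of our knowledge and should be checked against the current documentation. The helper `build_request` and the budget values are hypothetical, and nothing is sent over the network.

```python
import json


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a generateContent-style request body with a thinking budget (in tokens)."""
    if thinking_budget < 0:
        raise ValueError("thinking_budget must be non-negative")
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            # Assumed field names; verify against the current API reference.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


# Small budget: faster and cheaper. Large budget: slower, usually more thorough.
quick = build_request("Summarize this ticket.", thinking_budget=512)
careful = build_request("Plan a database migration.", thinking_budget=8192)
print(json.dumps(careful, indent=2))
```

Keeping the budget as an explicit parameter makes it easy to tune per use case instead of hard-coding one value for every request.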

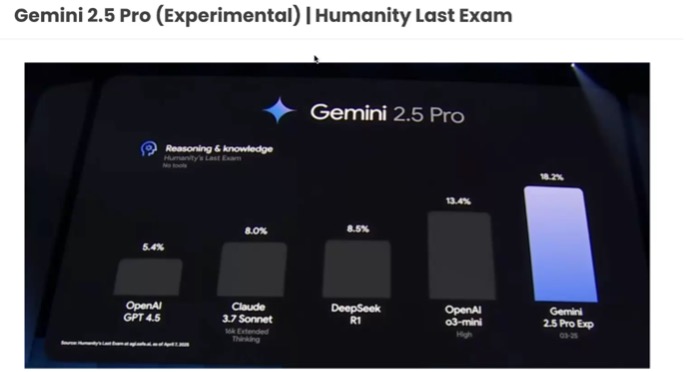

In Humanity’s Last Exam (~1,000 never-published questions), Gemini 2.5 Pro reportedly reaches 18.2% accuracy, outperforming peers. The result is valuable because the benchmark tests generalization beyond training data.

Multimodal in practice

Highlights from the webinar demos:

- Interactive image analysis: from a single photo, the model returns step-by-step guidance and illustrative images (e.g., how to open a specific door).

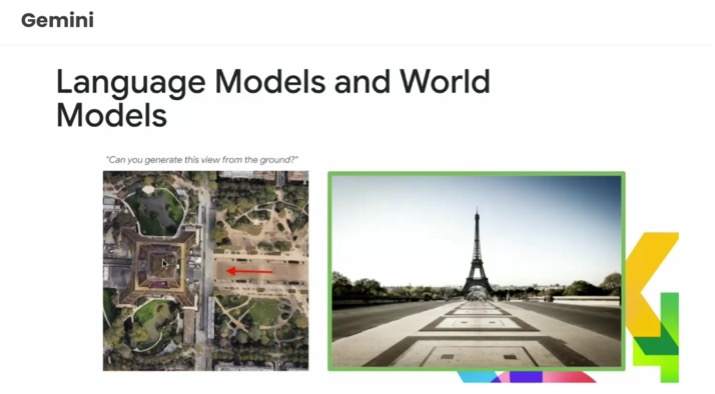

- View transformation: from an aerial image, generate a ground-level view from any arrow-marked spot (e.g., toward the Eiffel Tower).

- Google Veo 2: video generation with advanced camera-motion control and smooth transitions between stills.

- Robotics integration (experimental): translate natural-language commands (“pick up this orange and store it”) into instructions for a robotic arm.

Vertex AI: what’s new

Usage of Vertex AI has grown 20× year-over-year. New features include:

- Global endpoint: smart routing to the optimal region (lower latency, fewer quota issues).

- Context caching: cache static prompt parts to cut costs by tens of percent.

- Controlled generation: structured JSON outputs enforced by schema.

- Grounding: anchor answers to enterprise data (Vertex AI Search), Google Search, Google Maps (250M+ places), and now sources like Elasticsearch. A standout demo mapped all relationships in Les Misérables into an interactive graph.
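The controlled-generation item in the list above is easiest to picture from the consumer side. The sketch below is not the Vertex AI API: it defines a hypothetical schema (`TICKET_SCHEMA`) in a small JSON-Schema-like subset and a tiny standard-library validator (`conforms`, also our name) to show the kind of guarantee schema-enforced output gives the code that reads it.

```python
import json

# Hypothetical schema using a small subset: type, properties, required, enum, items.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "priority": {"type": "string", "enum": ["low", "medium", "high"]},
        "tags": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "priority"],
}

_PY_TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "number": (int, float),
    "boolean": bool,
}


def conforms(value, schema) -> bool:
    """Return True if `value` matches `schema` (subset validator, illustration only)."""
    kind = schema["type"]
    if not isinstance(value, _PY_TYPES[kind]):
        return False
    # bool is a subclass of int in Python, so reject it explicitly for numbers.
    if kind == "number" and isinstance(value, bool):
        return False
    if "enum" in schema and value not in schema["enum"]:
        return False
    if kind == "object":
        props = schema.get("properties", {})
        if any(key not in value for key in schema.get("required", [])):
            return False
        return all(conforms(value[k], props[k]) for k in value if k in props)
    if kind == "array":
        item_schema = schema.get("items")
        return item_schema is None or all(conforms(v, item_schema) for v in value)
    return True


good = json.loads('{"title": "Login fails", "priority": "high", "tags": ["auth"]}')
bad = json.loads('{"title": "Login fails", "priority": "urgent"}')
print(conforms(good, TICKET_SCHEMA))  # True
print(conforms(bad, TICKET_SCHEMA))   # False ("urgent" is not an allowed priority)
```

With schema enforcement on the model side, a check like this should never fail, which is what lets downstream code parse responses without defensive string handling.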

AI agents: automation & integration

Agents were the headliner:

- Project Mariner: Chrome extension for autonomous web control (private preview).

- Web agents: voice-controlled browsing running in-browser on Gemini Nano, without sending data server-side.

- Agent2Agent (A2A) protocol: a new open standard for agent-to-agent communication (launched with 50 partners). Unlike Anthropic’s MCP, which connects a model to tools and data, A2A focuses on direct messaging between agents.

- Agent Development Kit (ADK): open-source framework for building agents with enterprise data, long-running jobs, 100+ connectors, Vertex AI Search/RAG integration, multi-model support, and MCP compatibility.

- Google Agentspace: no-code app for using and building agents in one UI, integrating with Google Drive, Microsoft Word, Outlook, and more.

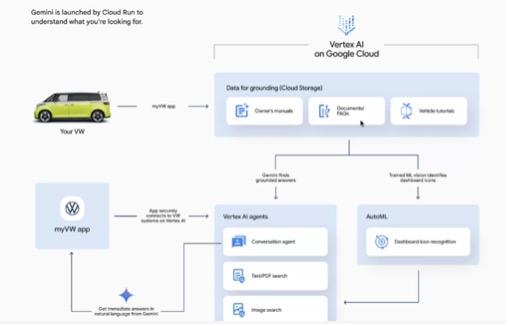

A practical case from Volkswagen showed Vertex AI Search powering manual lookup and warning-light identification from photos in their mobile app.

Tools for developers & data teams

- Code Assist Kanban: mention a task in a Google Doc and an AI agent adds it to a Kanban board and works it through.

- Firebase Studio (formerly IDX.dev): generate a full app (front-end + back-end) from text and deploy instantly—no coding required.

- Cloud Run with GPUs: fast model deployment with GPU acceleration (e.g., Llama-2 13B can cold-start in ~30s).

- Weather API: new global weather predictions with Czech Republic support.

For data analysts: BigQuery with data agents, personalized notifications using embeddings, and Geospatial Engine for geo-analytics.
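One way embeddings can drive personalized notifications is similarity ranking: represent the user and each candidate notification as vectors and surface the closest matches. The sketch below is a minimal illustration of that idea, not the BigQuery feature itself. The three-dimensional vectors are made-up toys (real embeddings come from an embedding model and have hundreds of dimensions), and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_notifications(user_vec, candidates) -> list:
    """Return candidate titles sorted by similarity to the user vector, best first."""
    return sorted(
        candidates,
        key=lambda title: cosine_similarity(user_vec, candidates[title]),
        reverse=True,
    )


# Toy data: invented vectors standing in for real embeddings.
user = [0.9, 0.1, 0.0]
candidates = {
    "New EV charging map": [0.8, 0.2, 0.1],
    "Quarterly finance report": [0.0, 0.1, 0.9],
    "Service reminder": [0.4, 0.8, 0.1],
}
print(rank_notifications(user, candidates))
# ['New EV charging map', 'Service reminder', 'Quarterly finance report']
```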

More notable updates

- Podcast Generation API: create podcasts from text—for example, personalized morning briefings.

- Improved TTS voices: synthesize a voice from 10 seconds of audio (Czech not yet supported, but expected later).

- Google Distributed Cloud: run Google tech (including Gemini and Agentspace) on-prem, either connected or fully air-gapped.

- Google Build Program: developer subscriptions at $300 or $900/month, with credits (up to $1,800/year) and perks like upgraded Code Assist and training access.

Wrap-up

The webinar delivered a comprehensive look at the innovations unveiled at Google Cloud Next 2025. Thanks to first-hand insights from Ivan Kutil, we gained both technical depth and a practical view of applicability. Tracking these trends together helps Green:Code keep pace with the rapidly evolving AI landscape and collaborate effectively with partners like Škoda Auto.
